Create ML App

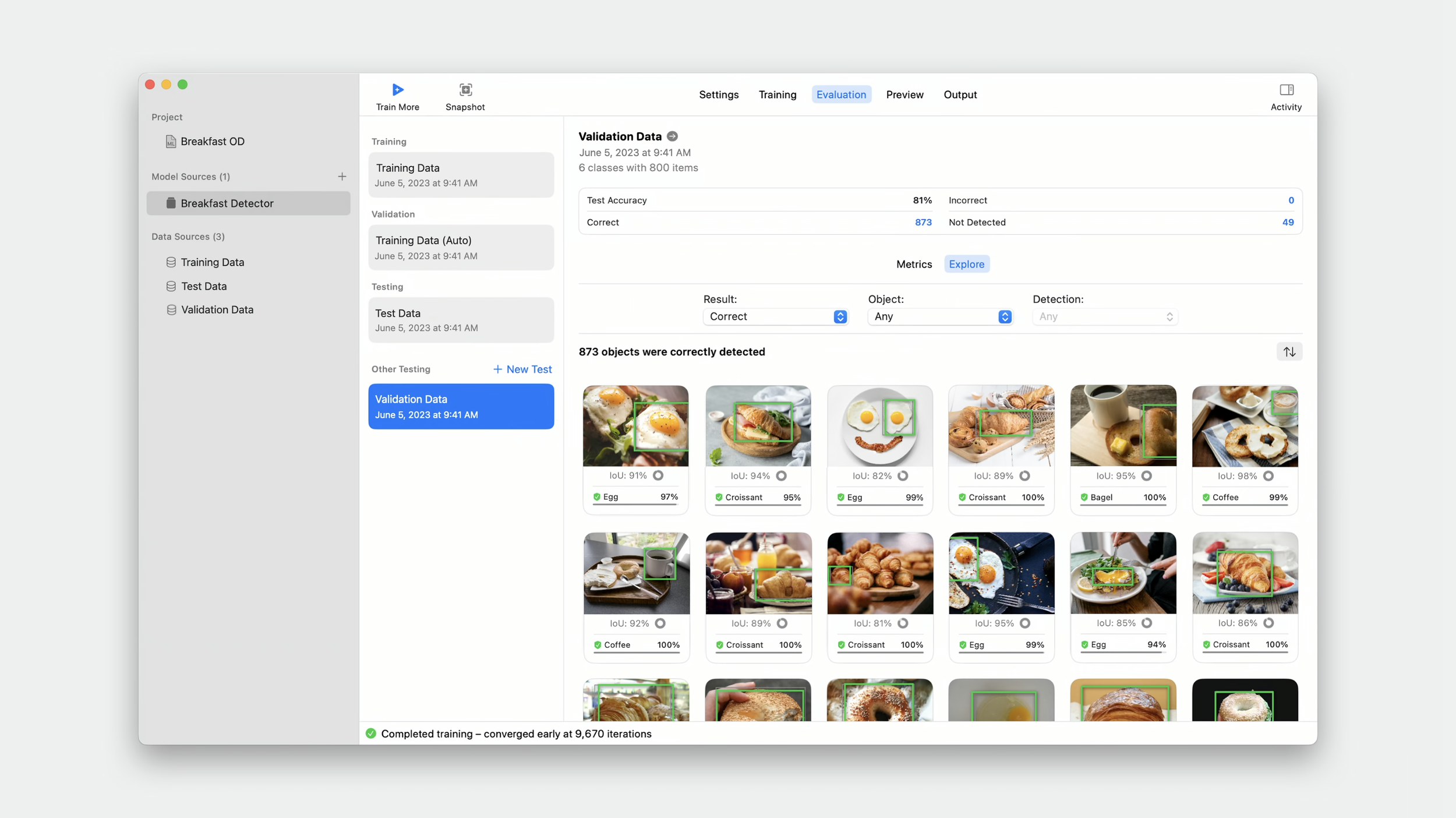

I was the design owner for the Create ML app. I launched a new model evaluation user experience and visual system for the app, along with new vision-based models such as object detection, image classification, and multi-label image classification.

May 2022 – June 2023

Launches: WWDC 2022, 2023

Our team's ambition was to make model evaluation simple, visual, and accessible to anyone. I designed for the Create ML app, the Core ML system, and the connection points with Xcode.

Visual evaluation for Image Classification and Object Detection models

Interactively learn how your model performs on test data from your evaluation set. Explore key metrics and their connections to specific examples to help identify challenging use cases, further investments in data collection, and opportunities to help improve model quality.

These product ideas originated with our team and me. I illustrated the design vision from zero, before the features and new models existed. We ended up launching…

See the demo here

Data previews with continuity on macOS and iPadOS

Visualize and inspect your data to identify issues such as wrongly labelled images or misplaced object annotations.

See the demo here (4:45).